I often get asked about my design process, so I wanted to write a piece on how I figure out what to do next.

The difference between a process and a standard

Design Thinking and User Centred Design are important frameworks that help us to communicate and standardise good practice. However, it's important to understand whether a standardised process is always the best approach.

Public services, for example, serve broad user groups who need consistent experiences across multiple channels. If strictly defined processes aren't followed, there's a high risk that the needs of some users will be missed.

In this context, a process stops being the best way to use available resources to achieve the desired outcome and becomes a method for checking that a large network of teams isn't cutting corners. In short, it's a standard, not a process.

The GDS service standard is an example of when a strict, uniform process is important

Understanding what not to do when there's never enough time

In commercial environments (and especially in smaller organisations) a strictly standardised process is rarely useful at anything other than a very high level. I've spent a fair bit of time with product teams attempting to define "their process". These efforts are well-intentioned but, with deadlines looming, corners are often cut as soon as the agreed process is defined.

Most designers broadly understand what an ideal process looks like:

Understand business and user problems

Define the difference you hope to make and how to measure it

Explore solutions

Gather feedback early and often

Develop the highest impact solution in the available time

Evaluate results and iterate

This kind of outline is fine, but beneath each of those steps lie hundreds of possible interpretations, approaches and rabbit holes. Rather than trying to align teams around a strict, linear process, I often find it's better to help them understand the risks and potential benefits of moving on before a step feels complete.

The importance of risk and confidence

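In his book Inspired, Marty Cagan describes four big risks that organisations face when developing a product: value risk (will customers buy it or choose to use it?), usability risk (can users figure out how to use it?), feasibility risk (can we build it with the time, skills and technology we have?) and business viability risk (does it work for the rest of the business?).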

The four big risks

These can be seen as high-level project risks, and it's important to assess and understand them as early as possible.

By the time a project lands on a designer's desk, there's usually a back story behind how it came about. Uncovering that story is really important: it reveals what's already known from previous work and helps you test the assumptions that have been made along the way.

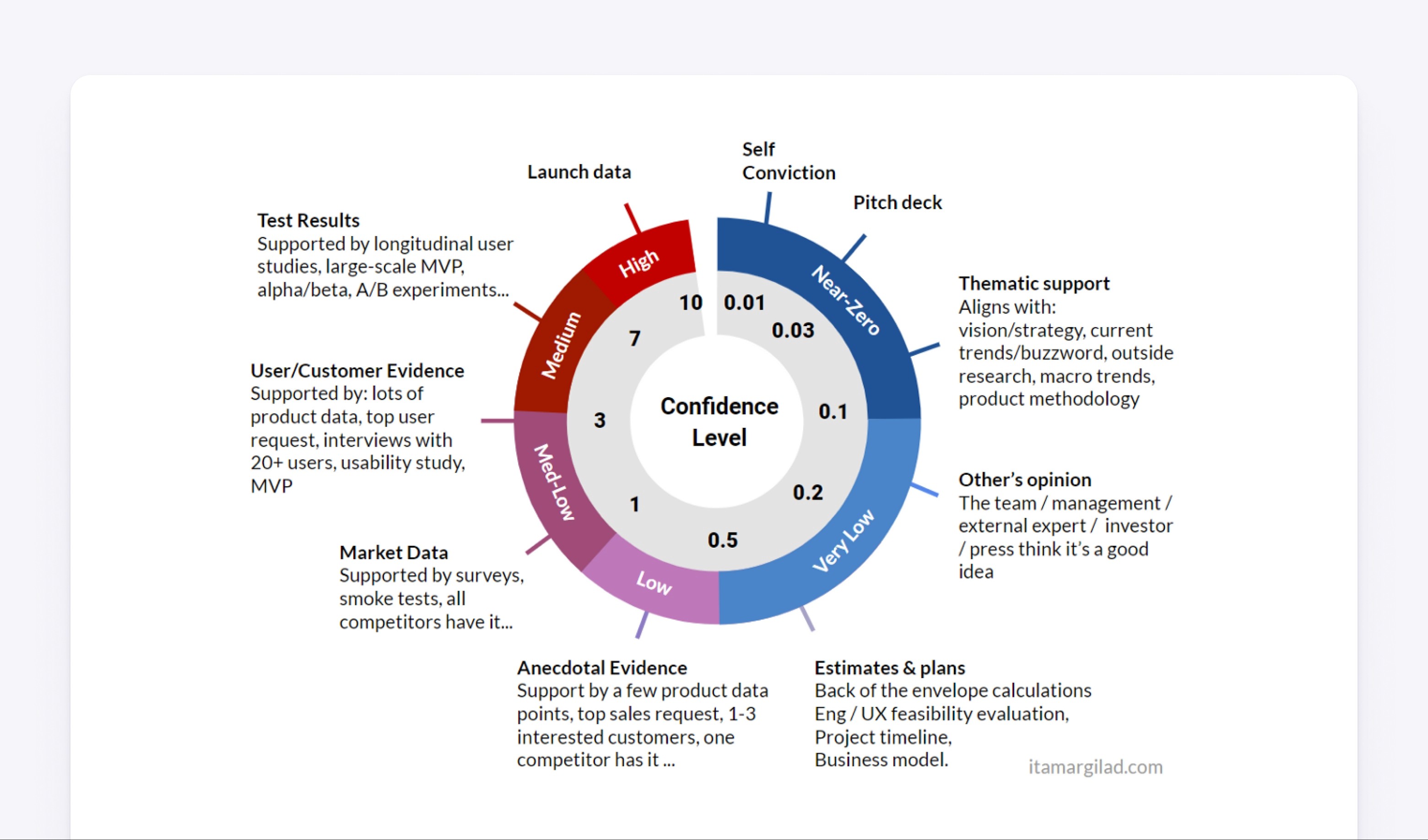

Prioritisation activities often use the concept of "impact" to estimate how much an idea will affect a key metric. However, those estimates can vary wildly between people or suffer from confirmation bias (especially if it's the CEO's pet project). Integrating Itamar Gilad's confidence meter into prioritisation methods is a great way to weed out these assumptions. If confidence scores are high, you can probably skip a few steps in your ideal process. If confidence scores are low, you may need to spend a bit longer on whatever stage you're currently at.

An illustration showing how Itamar Gilad defines levers of confidence

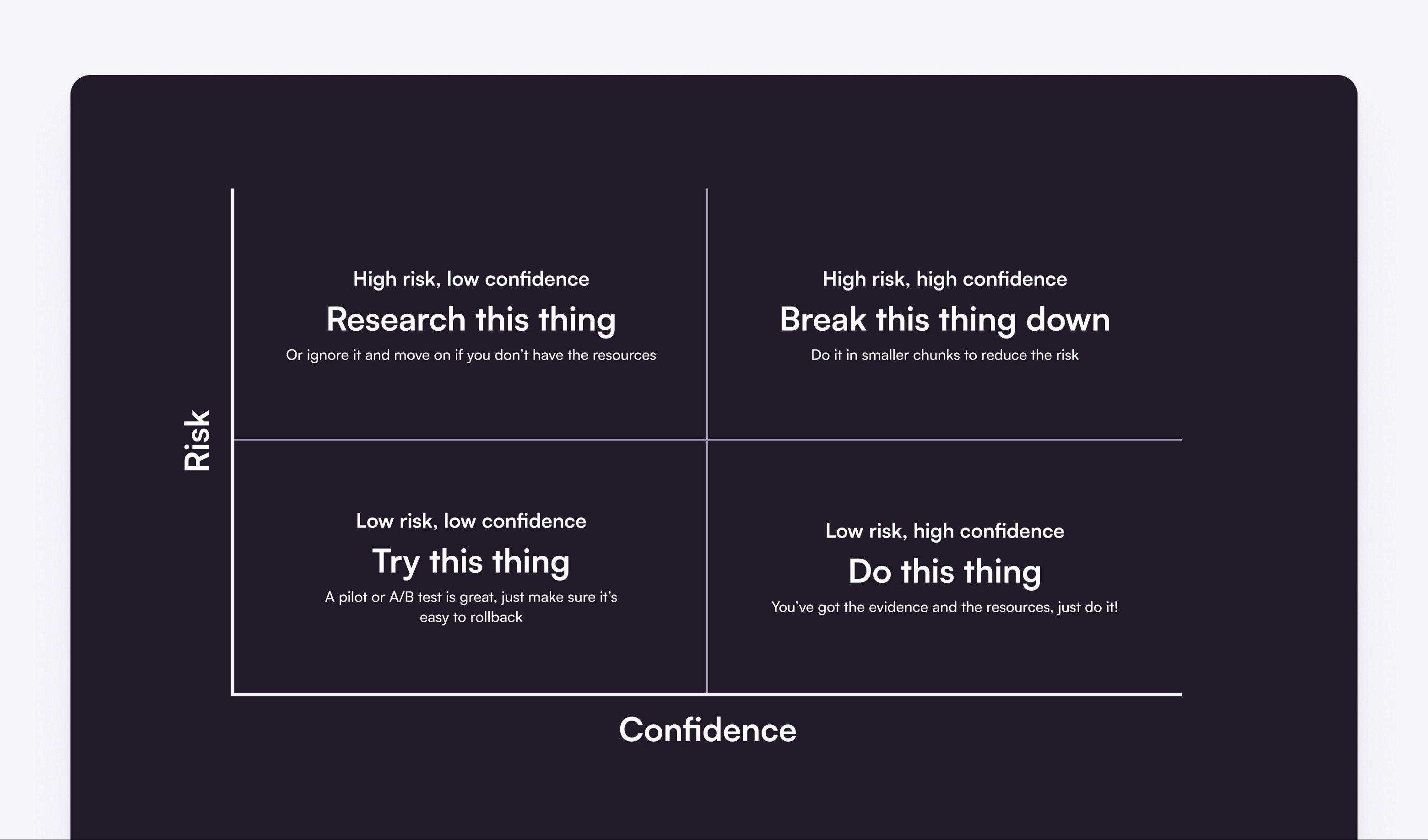

Whether you're planning a new feature or just trying to figure out which activity to do next, using some sort of risk vs confidence assessment can help you understand how best to use available resources.

An illustration of a Risk Vs Confidence prioritisation matrix

Focusing on outcomes

Hopefully, using some of these methods helps you get to the point where "doing less" isn't necessarily the same as "lowering quality".

If you find that building confidence in a certain area is a recurring problem, it might be worth creating rituals that focus on understanding more about that part of your process outwith a time-bound project. For example, Teresa Torres suggests automating interview recruitment so that customer interviews become a habitual part of your weekly schedule.

Whatever you choose to do next, asking how it contributes to the desired outcome is a great practice to establish. Josh Seiden defines an outcome as "A change in human behaviour that drives business results". If those humans find value in what you've built, they'll rarely care about how you built it.